8:50—We’re 10 minutes or so out from the start of NVIDIA’s seventh annual GPU Tech Conference, kicked off by CEO Jen-Hsun Huang.

The first GTC took place in a set of hotel ballrooms a few blocks away. Today, we’re in the largest hall in the San Jose Convention Center.

We’re expecting a crowd of more than 5,000. That’s up from 4,000 last year, a growth rate that’s tracked pretty steady since the start of the show.

GTC 2016 Keynote

The stage is set: our GTC 2016 keynote is about to begin.

8:55—The room’s virtually all black. The stage is about five feet off the ground.

And on the vast screen is an NVIDIA-green moving image that, as it scans, looks like a multi-level rendering of the brain’s neural network, with some electronics thrown in between.

8:58—There are a lot of media and industry analysts here. Upwards of 300 or so, a record for GTC. And we’re expecting probably 100 or so financial analysts and investors.

A great many of those here, though, are scientists and analysts of the computational sort — those who rely on NVIDIA GPUs to help them crunch the rising sea of data that’s engulfing us.

A lot are associated with universities, close to 200 of them. Virtually every one of the top 100 university comp sci departments is here.

There are also hundreds of companies represented—certainly the dozens of major web-services companies that use artificial intelligence. But also industrials, oil and gas, retail.

9:00—NVIDIA usually has cool warmup music for the room. Err, less so this time. But folks don’t seem to mind.

They’re waiting for Jen-Hsun, who’s usually exceptionally prompt. Count on it. He’ll be in his trademark black leather jacket and dark pants, and he’ll have a great story to tell.

9:05—The music’s shifted a third time. That must mean we’re getting close. I have to admit, I’ve seen some of this already.

It’s going to be a great show, with a ton of news. We’ll do our best to keep up on the blog. But keep an eye on the NVIDIA Newsroom and blog, where we’ll be posting in real time.

9:08—We usually are prompt in getting things started but folks are still coming into the room.

There are a few overflow rooms as well, to be used if need be, which seems inevitable.

Okay, the music’s dying down. The so-called Voice of God has come up telling folks to sit down, quiet their phones, and settle in for the fireworks.

9:10—Things usually start off with a video, and there’s going to be one worth the price of admission.

9:11—Each year, we kick off GTC with a video. Part tribute to researchers. Part inspiration. Part sheer entertainment.

This year, it’s playing out the magic and power of artificial intelligence. And in grand scale. The screen’s 100×15 feet. The resolution just a hair from 4K.

The video starts with a moment of deep blackness. Cue Peter Coyote. He’s a one-time counterculture figure who now sounds like a casual sophisticate. His voice demands adjectives associated with wine tasting.

He’s talking about a Big Bang-like spark of inspiration. One that triggers insights that lead to breakthroughs. First cut is to a rendering of the CERN collider doing research on the Higgs boson “God particle.”

9:12—Now, some cool examples from around the world of image-recognition – like identifying an elephant in the grass (okay, maybe not hard to locate if the grass isn’t that tall, but tricky if you’re a piece of software) and coral reefs. Our narrator notes that AI can allow scientists to do in a month what once took a decade.

Screen reveals more magic – Baidu Deep Speech 2 turning spoken Mandarin into text. And Horus Technology allowing the blind to see.

Now up: autonomous vehicles like Holland’s WEpod (you can already catch a ride on it if you’re in the low lands) and self-navigating drones for search and rescue. Next up: cool robots, including UC Berkeley’s BRETT, a self-learning robot enmeshed in what looks like a Montessori School exercise of fitting a block into a square hole. And, of course, Google DeepMind’s AlphaGo making fast work of the world’s most brilliant player of Go. That’s a game with more possible moves than there are atoms in the universe. Fun fact: The Go board animation here accurately recreates every move in Game Three of the five-game match (yep, AlphaGo won that one).

The message: The technologies that GTC is all about help the thousands of researchers and scientists in the room make breakthroughs that are changing the world.

9:14—Okay, with that opener about the collective imagination, fueled by technology, Jen-Hsun Huang takes the stage.

The theme is “A New Computing Model.”

NVIDIA builds computing technologies, he said, for the most demanding computer users in the world. We build them for you.

Your work, he says, is done at such a gigantic scale. Your work with stakes so high, impact so great. Your work needs to be done within your lifetime.

The computers you need aren’t run of the mill. You need supercharged computing: GPU Accelerated Computing.

9:15—GTC is about GPU computing: a place to share your discoveries and reveal your innovations.

GTC is getting bigger than ever: GTC is 2x as large as 2012. But we’re doing them around the world now—in Japan, in Europe, and smaller ones elsewhere.

It’s essential for what you’re doing. So, we’ve dedicated NVIDIA to a singular craft: to advance our craft so we can do amazing work, so we can do our work so well that you can do your work.

9:17—Supercomputing, or high performance computing, has doubled in two years’ time. 97 percent of all high performance computers now include NVIDIA technologies.

“I want to thank you for adopting NVIDIA so you can do your work.”

He’s going to talk about five things: a tool kit, VR, a deep learning chip, a deep learning box, and deep learning in autos.

The industries that come are broad and wide-ranging. In four years’ time, the number of CUDA developers has increased 4x.

9:21—First announcement is the NVIDIA SDK.

He calls it the essential resource for GPU developers. This SDK – that is, software development kit – has different flavors:

NVIDIA GameWorks—it includes technologies that make physics apply in games, that make hair look realistic, that enable waves to really shimmer, and fire to flame like it does in true life. “Recreating the world is essential to great games,” Jen-Hsun says. There are subtleties that convince us that what we’re seeing is real – whether in shadows or dark or sunlight.

NVIDIA DesignWorks—this is to make photo-real graphics. Iray creates photorealistic images from designs so it’s not just beautiful but accurate. We have a new library called MDL which shows how real surfaces react in the real world—so carbon fiber, gold, brass, plastic all look the way they do in the real world. OptiX and Path Rendering allow other images to look beautiful.

For more on today’s software announcements, see “NVIDIA Details Major Software Updates to Sharpen GPU Computing’s Cutting Edge.”


NVIDIA CEO Jen-Hsun Huang delivering our GTC 2016 keynote.

9:29—ComputeWorks: CUDA is the computing architecture of our GPUs. It’s revolutionized accelerated computing and democratized high performance computing. On top of CUDA is a library called cuDNN, which allows neural net developers to create their frameworks to run as fast as possible. It lets you run DNNs 10-20x faster. Today, we’re rolling out nvGRAPH to show relational data so we can understand how large data plays out in a social-based graph. IndeX helps you wade through terabytes of data by simulating data and rendering the volumetric image. It’s the world’s largest visualization platform.

Jen-Hsun announces that CUDA 8 is coming, along with the latest version of cuDNN.

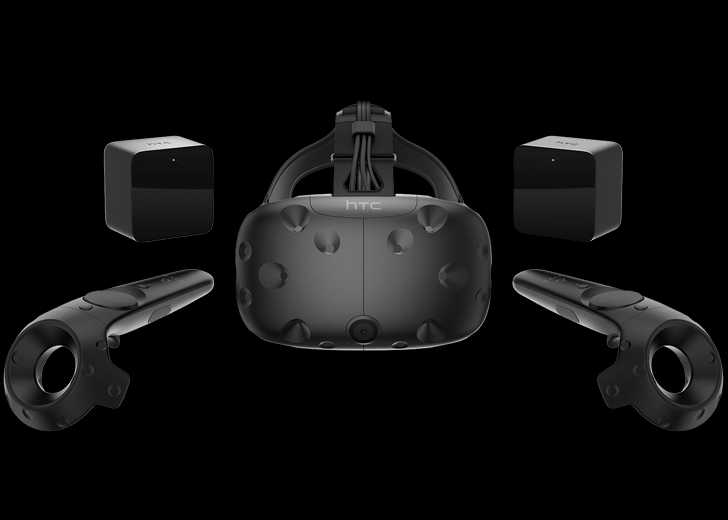

NVIDIA VRWorks—lets you see graphics close up, without latency, which relates to the puke-factor (my words, not Jen-Hsun’s). This is integrated into game engines, head-mounted displays and the like.

NVIDIA DriveWorks—it’s a suite of algorithms that allow car developers and startups to create self-driving cars. It’s still in development and accessible to only a few partners at this point, but it will be more broadly available in Q1 2017.

9:30—Next one up is NVIDIA JetPack: this is for Jetson, our platform for autonomous machines like robots and drones. The GPU Inference Engine, or GIE, will be available next month for inferencing. As a result, Jetson TX1, our latest platform for autonomous vehicles, can process inferencing on 24 images per second per watt—an unprecedented level of energy efficiency. “This makes CUDA the highest performance and most energy efficient way to do deep learning.”

9:37—So, that’s it for the NVIDIA SDK, “a tool kit for you” (assuming you’re a developer).

We’re now on to VR—It’s not just a gadget. It’s a whole new platform. LCD displays were revolutionary, opening the door to everyday graphics and mobility.

VR is just as powerful—the latest generation of head mounted displays are light with high resolution.

Video games are an obvious application—who wouldn’t want to be on the field of battle or chasing monsters? You can imagine that in VR. It’s also easy to see how VR will change how we design products – you can stand next to a car or building. But there are also cool things about VR that can take you places you can only dream up. Places that are beyond your ability to reach. Surely, it will transform communications, as with Microsoft’s HoloLens, so that someone who is far away seems to be standing right before you.

Today, we’re going to take you to places you only dreamed up.

The first is Everest, which you can see at GTC’s VR Village, an area crammed full of VR demos (unlikely to find a village idiot there). This recreates Mount Everest, pixel by pixel, and uses PhysX to simulate swirling snow.

Jen-Hsun now shows a VR experience that brings Everest to the full 100-foot screen, all simulated, no movies here. It does look like a National Geographic special, but this is computer graphics. Real enough for you to worry about snow blindness, hypothermia and whether you need to eat lunch from foil packets. There are 108 billion pixels in this experience.

9:44—There are other places where you can dream of going but can’t really go.

Jen-Hsun announces a VR experience of Mars, a reconstruction of eight square kilometers of Mars surface, from photos taken by NASA and other work.

Because this was made with NASA and Fusion Media, we’ve recreated the whole Mars experience that you can enjoy. The Rover is physically simulated, so it bumps the way it would on Mars, and the lighting shows what it would be like on Mars. There’s even a lava tube that’s twice as tall as the Empire State Building—the only way to enjoy it is in VR.

He now shows this incredible recreation of Mars. Although it’s all rendered, it looks like Matt Damon should pop up. Even the noise of the rover was recorded by driving the rover at the Johnson Space Center.

If we were to send someone to Mars, who would deserve the first ticket? Who would be the most excited? There’s only one adult-child explorer who loves to do something no one has ever done—that’s Steve Wozniak, that is, the Woz, who co-founded Apple (and has a street named after him in San Jose, just a few blocks from the convention center).

Woz is shown on screen. He said a few weeks ago in an interview that he’d buy a one-way ticket to Mars if he could. So, why do you want to go to Mars, Jen-Hsun asks Woz. “Because it’s so hard.”

“If it was possible to go, would you go?” Jen-Hsun asks. “What would be your profession?”

“I would,” Woz says. “My profession would be global marketing for Mars.”

9:46—“If you land, first thing you should do is find Matt Damon,” Jen-Hsun says.

Woz says the first thing he’d do is dig out the letters “MOM” to say hello back down to Earth.

Woz straps on a head-mounted display. He’s now maneuvering around space and we can see the experience that Woz has, as Jen-Hsun thanks Cisco for helping us beam all this back to the San Jose Convention Center.

Woz is looking for the rover. “Whooaaa, I feel like I’m actually here. I’m entering the rover, Whooa, oh my God.”

“I’m going back to do my job,” Jen-Hsun says, wrapping up his conversation with Woz.

“Am I on Everest afterward?” Woz asks. “This is going to be incredible for whoever gets to do this.”

9:53—Jen-Hsun says that for product design, it’s got to be photoreal. This requires a whole new rendering technology. To get something photoreal, you need something that picks up every single photon, and how each photon interacts with surfaces.

In the case of design, it’s utterly important. So, we’re announcing Iray VR.

For more on today’s Iray VR announcement, see “NVIDIA Brings Interactive Photorealism to VR with Iray.”

We created a new technology that renders light probes throughout the room you want to interact with. The probes show how light would emanate from each spot. Each is a 4K render, which takes an hour to render on eight GPUs. We need to render 100 probes, so that’s 100 hours. We rasterize each scene from the point of view of your eye. We then mix and process the results so that what you see picks out the best from a combination of light probes.

With very low latency and our work with VRWorks, we can allow you to include Iray in VR.

“What’s amazing is that it’s beautiful,” Jen-Hsun says. “But I want you to enjoy it here.”

Jen-Hsun shows a head-mounted display that shows NVIDIA’s iconic new building, which is in the process of being built. It will be 500,000 square feet for 2,500 employees and filled with natural light. It’s a collection of intersecting triangles that are white and off-gray. In real time, Jen-Hsun shows a 3D version of the building. Even crusty reporters are saying, “wow.”

9:55—This will be unbelievable for people doing architectural walk throughs and designing cars.

We want people to enjoy VR, Jen-Hsun says, irrespective of the computing device they have. So, we’ve also made Iray VR Lite for those who don’t have a supercomputer. It can’t render 3D as beautifully as Iray. But with a press of a button it creates a photosphere that’s completely ray-traced. You download Iray plugins and an Android viewer, like Google Cardboard. And we have this experience on the show floor at GTC.

10:03—We’re now shifting gears away from VR.

One of the biggest things that ever happened in computing is AI. Five years ago, deep learning began, sparked by the availability of lots of data, the availability of the GPU, and the introduction of new algorithms (see “Accelerating AI with GPUs: A New Computing Model”).

In the past five years, there’s been one article after another announcing new breakthroughs in AI, thanks to deep learning. 2015 will be a defining year for AI, the computer industry and likely for all of humanity. This year marked something quite special. A few highlights:

ImageNet: Microsoft and Google were able to recognize images better than a human for the first time. Deep networks are now hundreds of layers deep, giving machines superhuman capabilities.

Researchers at Berkeley’s AI Lab built Brett – we saw him in the opening video – that was able to teach itself. The “tt” stands for tedious tasks that it’s able to address, like hanging up clothes.

Baidu used one deep-learning network to train in two languages – Mandarin and English.

Rolling Stone magazine wrote about Deep Learning – maybe its first dive into complex science.

AlphaGo, by Google DeepMind, beat the world’s leading Go champion. When IBM Deep Blue beat Garry Kasparov at chess, scientists said it would take a century for that to happen with Go. This has happened in far less time. To do it, AlphaGo played itself millions of times to learn how to beat the greatest living champion.

What this says is computers powered by deep learning can do tasks that we can’t imagine writing software for. Deep Learning isn’t just a field or an app. It’s way bigger than that. So, our company has gone all in for it.

10:09—Jen-Hsun now describes how, in the final analysis, all deep learning is similar, though it can do profoundly different things.

Using one general architecture, one general algorithm, we can tackle one problem after another. In the old traditional approach, programs were written by domain experts. Now you have a general deep learning algorithm and all you need is lots of data and lots of computing power.

In the ImageNet competition, a few years ago, computer vision alone was used. Now it’s all deep learning.

Jen-Hsun now shows an amazing slide called “The Expanding Universe of Modern AI.”

It shows how modern AI has gone from research organizations like Berkeley, NYU, and Carnegie Mellon, to expand into core technology frameworks, which are enabling AI as a platform through IBM Watson, Google and Microsoft Azure. These, in turn, have enabled AI startups – there are more than 1,000, which have attracted more than $5 billion in funding. Deep learning has now brought in industry leaders using AI – companies like Alibaba, Audi, Bloomberg, Cisco, Ford, GE, and Massachusetts General Hospital.

10:13—Why is it that Deep Learning is becoming such a large hammer, like Thor’s Hammer? Deep Learning is a concept that’s easy to apply. You can use frameworks like Google’s TensorFlow to train your own network.

Industry analysts say AI will be a big industry. It won’t be an industry, it’s a computing model. Some say $40B by 2020. John Kelly on IBM’s Watson team believes cognitive computing will be a $2 trillion industry. Our goal isn’t to have computers and be productive – it’s to have insight.

Speaking of the pervasiveness of AI… you have an example of its power in your pocket right now.

So fire up your smartphone or tablet during our keynote. We won’t mind. Now take a look at your home screen.

Chances are our GPUs are powering more than a few of your favorite apps.

Microsoft uses GPUs to power cutting edge speech recognition apps. Shazam relies on GPUs to recognize that tune you’re hearing on the radio. Yahoo is using them to help sort through images uploaded from your smartphone to Flickr.

You can find more than enough examples to fill up the home screen on your smartphone.

If you want to know what’s next for GPU-accelerated computing, look no further.

The artificial intelligence boom (see “Accelerating AI with GPUs: The New Computing Model”) being driven by a new, GPU-powered technology known as deep learning has led to a new generation of apps that put the power of GPUs just a tap away for hundreds of millions of consumers.

10:20—“Tesla M40 is quite a beast. It’s a beast of a GPU accelerator for internet training. We have something smaller, an M4, for inferencing.”

There’s no reason to use FPGAs or dedicated chips. Our GPU is not only energy efficient, it’s universal. You could use it for transcoding, image processing, as well as deep learning. These two products have become our fastest growing business, adopted by internet service providers across the world.

Every internet service company would benefit. There’s every bit of evidence deep learning will be in every industry.

What’s really amazing: Deep Learning has been using one approach—supervised learning. This approach to training is laborious because the vast majority of the world isn’t labeled.

FAIR, Facebook’s AI Research lab, is using unsupervised learning, where you can just blast your network with a whole bunch of data, and it figures out what to do.

Mike Houston, an NVIDIA AI researcher, joins Jen-Hsun on stage to describe how we’ve shoved 20,000 pieces of art into a neural network, all from the Romantic era. Having done that, we can now tell the computer to draw a landscape, and it draws one in the style of the art that it’s been shown. “It’s a neural network with artistic skills,” Jen-Hsun says.

I’m partial to pastoral images, Houston says. So, you can type in “pastoral,” and it shows something that’s between a farm and forest. You can ask it to draw a beach and that’s what shows up. You can take a sunset, ask the network to remove the clouds, remove the people and it generates new art altogether.

Jen-Hsun Huang delivering GTC 2016 keynote


10:24—These supercomputers are being trained with so much data, they need to get much bigger. We need autonomous machines to study all the time and learn in real time.

As a result, we decided to go all in for AI, to design an architecture dedicated to accelerating AI.

There’s a big reveal coming. “The Most Advanced Hyperscale data center GPU ever built.” It’s the Tesla P100.

It’s the first GPU built on NVIDIA’s 11th generation Pascal architecture. It’s built to blaze new frontiers for deep learning applications in hyperscale data centers. It’s got muscle: 15 billion transistors, with 20 teraflops of half-precision performance.

For more on Pascal, see “Inside Pascal: Inside NVIDIA’s Newest Computing Platform.”

10:31—When he says 21.2 teraflops at half precision, there’s broad applause.

There are 14 megabytes of register files.

Here’s what’s really amazing about the P100. We have rules in our company, Jen-Hsun says. You need to be thoughtful about the risks you take, he says, adding that no great project should ever rely on three miracles. But this relies on five miracles, Jen-Hsun says.

First, is its architecture.

Second, NVLink, which delivers a 5x increase in interconnect bandwidth across multiple GPUs, or 160 gigabytes a second.

Third, its 16nm FinFET fab technology – this is the world’s largest FinFET chip ever built, with 15 billion transistors and huge amounts of memory.

Fourth, is CoWoS with HBM2 Stacked Memory, which unifies processor and data in a single package – there are 4,000 wires connecting Pascal to all the memories around it.

And fifth, AI Algorithms.

This is the result of thousands of engineers working for several years. We’re willing to go all in on something on sheer belief, with so much uncertainty.

10:33—P100 represents giant leaps in many fields.

There’s a string of big names who are endorsing Pascal and the breakthroughs it will enable.

One of the biggest is the big dog of deep learning, Yann LeCun, director of AI Research at Facebook.

Baidu chief scientist, Andrew Ng, who keynoted at GTC last year, said, “AI computers are like space rockets. The bigger the better. Pascal’s throughput and interconnect will make the biggest rocket we’ve seen.”

Another is Microsoft’s Xuedong Huang, their chief speech scientist (and no relationship, by the way, to Jen-Hsun Huang)

Servers with P100 are being built by IBM, Hewlett Packard Enterprise, Dell and Cray

We’re in production now. We’ll ship it, “soon.” First it will show up in cloud and then in OEMs by Q1 next year.

NVIDIA's CEO Jen-Hsun Huang unveils NVIDIA DGX-1, the world's first deep-learning supercomputer


10:39—We decided to build the most advanced computer that anyone has ever built. We decided to build NVIDIA DGX-1, the world’s first deep learning supercomputer.

It’s engineered top to bottom for deep learning: 170 teraflops in a box, 2 petaflops in a rack, with eight Tesla P100s.

It’s the densest computer node ever made.

“This is one beast of a machine.”

To compare this to something everyday: a dual-Xeon server has 3 teraflops. This has 170 teraflops. With a dual Xeon it takes 150 hours to train AlexNet; with DGX-1 it takes two hours.

“What’s shocking is this: when you scale out, the communication between processors is such a large burden,” Jen-Hsun says. “It would take 250 nodes to keep up with one DGX-1.”

“It’s like having a data center in a box.”

“Last year, I predicted a year from now, I’d expect a 10x speed up year over year,” Jen-Hsun says. “I’m delighted to say we have a 12x speedup year over year.”

The fastest computer a year ago let us cut down training from a month to 25 hours with four Maxwell GPUs. This year, it will take two hours of training time with eight Pascal GPUs.
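The figures quoted from the stage can be checked with a few lines of arithmetic. This is a rough sketch that uses only the numbers reported in this post; the variable names are ours, and peak teraflops are not the same thing as measured training time, which is why the two ratios against the dual Xeon differ.

```python
# Figures as quoted during the keynote and reported in this live blog.
P100_FP16_TFLOPS = 21.2   # half-precision teraflops per Tesla P100
GPUS_PER_DGX1 = 8         # eight Tesla P100s in one DGX-1
DUAL_XEON_TFLOPS = 3.0    # the "everyday" comparison point

# Eight P100s together round to the "170 teraflops in a box" figure.
dgx1_tflops = P100_FP16_TFLOPS * GPUS_PER_DGX1


def speedup(old_hours, new_hours):
    """How many times faster the new training run is than the old one."""
    return old_hours / new_hours


vs_dual_xeon = speedup(150, 2)  # AlexNet: dual Xeon vs. DGX-1
vs_last_year = speedup(25, 2)   # four Maxwell GPUs vs. eight Pascal GPUs

print(f"DGX-1 peak: {dgx1_tflops:.1f} teraflops")
print(f"Peak throughput vs. dual Xeon: {dgx1_tflops / DUAL_XEON_TFLOPS:.1f}x")
print(f"Training speedup vs. dual Xeon: {vs_dual_xeon:.0f}x")
print(f"Training speedup vs. last year: {vs_last_year:.1f}x")
```

The year-over-year ratio of 25 hours to two hours is consistent with the "12x" claimed on stage.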

10:46—Jen-Hsun brings out a Baidu researcher, who used to be with NVIDIA, Bryan Catanzaro, who he calls “my hero.”

“You’re not only gracious and graceful, but few people know as much about deep learning,” Jen-Hsun says.

“At Baidu we’re really excited about using Pascal and NVLink to train recurrent neural nets,” Bryan says. “At Baidu we care a lot about sequential problems, like speech.”

There are two major ways to parallelize neural nets, Bryan explains. One is model parallelism (where you assign different parts of the model to different processors). The other is data parallelism (where a data set is so huge that you chop it into pieces and assign different pieces to different processors). Pascal is going to really help out, he explains. Bryan then talks about what Baidu is working on now.

“When we combine model parallelism with persistent RNNs with data parallelism, we can use more copies of the model, enabling us to scale to more processors,” Bryan says. “We’re working with 32x bigger models.”

“We’re really excited about the possibilities of Pascal and NVLink,” he adds. “They’ll let us churn through more data and more quickly.”
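For readers who want to see the data parallelism Bryan describes in miniature, here is a toy sketch. It is our own illustration, not Baidu's code: the one-weight model, the function names, and the data are all hypothetical. Each "worker" holds a copy of the same model, computes a gradient on its own shard of the data, and the shard gradients are averaged, which gives the same answer as one processor working through the whole data set.

```python
# Toy data parallelism (hypothetical example, not Baidu's implementation).
# Every worker has the same model, here a single weight w for y = w * x,
# and sees only its own slice of the training data.


def gradient(w, xs, ys):
    """Gradient of the mean squared error of y = w * x with respect to w."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def shard(items, num_workers):
    """Split a list into num_workers contiguous, near-equal pieces."""
    size, extra = divmod(len(items), num_workers)
    shards, start = [], 0
    for i in range(num_workers):
        end = start + size + (1 if i < extra else 0)
        shards.append(items[start:end])
        start = end
    return shards


def data_parallel_gradient(w, xs, ys, num_workers):
    """Average the per-shard gradients, weighting each by its shard size."""
    total = 0.0
    for xs_i, ys_i in zip(shard(xs, num_workers), shard(ys, num_workers)):
        if xs_i:  # a worker with no data contributes nothing
            total += gradient(w, xs_i, ys_i) * len(xs_i)
    return total / len(xs)


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.0 * x for x in xs]  # the "true" weight is 2.0
w = 0.5

full = gradient(w, xs, ys)                                # one processor
split = data_parallel_gradient(w, xs, ys, num_workers=4)  # four workers
print(full, split)
```

In a real system the averaging step is the communication between processors, which is where a faster interconnect would matter. Model parallelism, by contrast, would split the model itself across processors, and is not shown here.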

Baidu's Bryan Catanzaro.


10:52—TensorFlow will democratize AI, Jen-Hsun explains. It will make it available for every industry, every researcher.

Now Jen-Hsun introduces Rajat Monga, TensorFlow technical lead and manager at Google.

Jen-Hsun explains that Rajat has told him that inside Google, in just a year, there was exponential growth in the use of AI accelerated deep learning: it’s grown from 400 to 1,200 applications.

“What’s cool is you open sourced it,” Jen-Hsun says. “That made it possible for us to start adopting TensorFlow for DGX-1 right away. We took open source, and adopted it for DGX-1.”

Jen-Hsun asks Rajat about his hopes and dreams for TensorFlow.

“We want it to work with all sorts of devices,” Rajat says. “We’d love to see the community try it out, push it into new territories.”

“TensorFlow will democratize deep learning,” Jen-Hsun says. “That’s a huge contribution to humanity.”

10:57—Jen-Hsun says that DGX-1 will cost $129,000. Compared to that, 250 servers at $10K a piece would be $2.5 million, plus another $500,000 for interconnect between servers.

This draws applause.
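The price comparison is simple arithmetic. Here is a quick check using only the dollar figures quoted from the stage; the variable names are ours.

```python
# Dollar figures as quoted in the keynote.
dgx1_price = 129_000
servers = 250
price_per_server = 10_000
interconnect = 500_000

# 250 servers plus the interconnect between them.
cluster_price = servers * price_per_server + interconnect
ratio = cluster_price / dgx1_price

print(f"250-server cluster: ${cluster_price:,}")
print(f"One DGX-1:          ${dgx1_price:,}")
print(f"The cluster costs about {ratio:.1f}x as much")
```

By these numbers the cluster comes to $3 million all in, a bit more than 23 times the price of one DGX-1.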

So, who should get it first? It should be pioneers of AI – universities like Berkeley, NYU, Stanford, Montreal, Toronto, and the Swiss AI lab, Jen-Hsun says. “We’ve chosen them to be first recipients,” he adds.

I’m also super happy to announce that we’re joining in a venture with Mass General Hospital, Jen-Hsun says. We’ll be a founding technical partner of MGH’s Center of Clinical Data Science. Mass General now has 10 billion medical images which could be used to enable deep learning capabilities for radiology. This will be extended, in time, to pathology and other initiatives, Jen-Hsun says.

10:59—The Tesla family now includes:

M40/M4 for hyperscale HPC

K80 for multi-application HPC

P100 for strong-scale HPC

DGX-1 for early adopters

11:06—Last thing: AI is coming to cars.

We’ll continue to follow the model of “Sense. Plan. Act,” Jen-Hsun explains, to help cars drive themselves.

So, we’ve decided to build a car computer for this, the NVIDIA PX.

This is the world’s first Deep Learning-powered car computing platform. It’s one scalable architecture, from DNN training to cluster, infotainment, ADAS (advanced driver assistance), autonomous driving and mapping. And it’s an open platform.

Last time I showed you, we’d been working on object recognition for our own system. We’ve now achieved the number one score on KITTI, the self-driving industry benchmark. Also, those in places 2-8 are all GPU accelerated. This will make cars and streets safer and change the way we design cities.

We can detect cars in the front, in back, in the side, Jen-Hsun explains. You can detect objects all around you while running the smallest version of DRIVE PX, which is about the size of a Hershey candy bar, and can process 180 frames per second.

We can also make sure you can map. You should be able to use cameras with photogrammetry, or structure from motion, and Lidar to help map the world around you.

Jen-Hsun now shows an image of Baidu’s self-driving car computer. They built a super-computing cluster that fits in the trunk of a car.

We took all of this horsepower and shrunk it into DRIVE PX 2 with two Tegra processors and two Pascal next-gen processors. This four-chip configuration will give you a supercomputing cluster that can connect 12 cameras, plus lidar, and fuse it all in real time.

You stream up point clouds, which are accumulated and compressed in the cloud to create one big high definition map. This will also be a mapping platform. So you’ll have DRIVE PX 2 in the car and DGX-1 in the cloud.

For more about what we’re doing with high-definition mapping, see “Beyond GPS: How HD Maps Will Show Self-Driving Cars the Way.”

11:11—Our technology can track 15,000 important points per second per camera. It can collect 1.8 million points a second. We load this into the cloud, register it and calibrate it using DGX-1.

We created this so every mapping company in the world can map cars – companies like Here, TomTom, Zenrin. We need to map the world so cars can drive safely.

Let me show you something else, Jen-Hsun says.

We’ve been working for some time to create an alternative approach, like Brett the robot, which learns by itself. It learns what action it needs to take.

We’ve been working on a project with “BB8,” one of our three autonomous cars.

It runs our deep-learning network, which we call DAVENET – named after an earlier NVIDIA robot we showed at last year’s keynote speech.

Jen-Hsun shows a video filled with bloopers of BB8 almost swerving into trees, over cones, and nearly running into signs. But after three weeks of training, it maneuvers far better on the New Jersey Turnpike, and across roads without lanes.

“You saw what happened,” Jen-Hsun says. “DAVENET figured out how to drive without lanes, in the rain, in the fog… as we continue to drive, this car will take on superhuman capabilities.”

11:14—Jen-Hsun winds up by showing the world’s first autonomous race car. It’s designed by Daniel Simon, a legendary car designer. It weighs 2,200 pounds.

This is for the first global autonomous racing series – ROBORACE. There will be 10 teams, with 20 identical cars. And DRIVE PX 2 will be the brain of every car. It will be part of the 2016/17 racing season.

That’s it, Jen-Hsun says.

We talked about five things today:

The NVIDIA SDK;

Iray VR;

Tesla P100—the most advanced GPU ever made for hyper-scale data centers;

NVIDIA DGX-1—which puts the power of 250 servers in a box for just $129,000;

and HD mapping and AI driving.

The audience applauds. They’re a bit staggered by the volume of news, I think it’s fair to say.

For more on our role in the world’s first racing series for autonomous vehicles, see “Go, Autonomous Speed Racer, Go! NVIDIA DRIVE PX 2 to Power World’s First Robotic Motor Sports Competition.”
